Qualcomm held a one-day event in San Diego, updating us all on their AI research. Pretty amazing stuff, but the big news is yet to come with a new Oryon-based Snapdragon expected this Fall, and perhaps a new Cloud AI 100 next year.

Qualcomm was quite confident last week about its AI edge, well, at the edge. Its position is strong and getting stronger. Most of what the company covered is already known, and we have covered much of it here and elsewhere. But there were a few hints of some big updates coming.

Qualcomm AI: The bigger, the better, provided you can make it smaller.

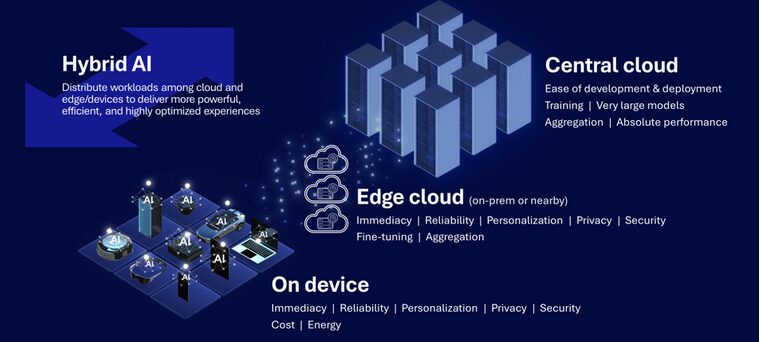

While the rush is on to make AI ever larger, with mixture-of-experts models breaking one trillion parameters, Qualcomm has been very busy squeezing these gargantuan models down so that they will fit on a mobile device, on a robot, or in a car. You can always fall back on the cloud if you have to run larger AI, they say. But the pot of gold you’re looking for is in your hand: your phone.

Qualcomm has invested in five areas that enable these massive models to slim down. Most AI developers are familiar with quantization and compression, but distillation is a relatively new and very cool field in which a “student model” learns to imitate the bigger teacher model while being small enough to run on a phone. Speculative decoding is also getting a lot of traction. Add it all up, and the small models can be a hell of a lot cheaper than the massive ones while still delivering the quality needed.
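To make the quantization idea concrete, here is a minimal sketch of symmetric per-tensor 8-bit quantization in plain Python. It is an illustration under stated assumptions, not Qualcomm's method: the function names and the sample weights are hypothetical, and production toolchains use more sophisticated schemes (per-channel scales, calibration data, lower bit widths).

```python
def quantize_int8(weights):
    """Map floats to integer codes in [-127, 127] plus one shared scale.

    Hypothetical helper for illustration; symmetric per-tensor scheme.
    """
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


# Made-up sample weights, purely for demonstration.
weights = [0.5, -1.0, 0.25, 0.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored in one byte instead of two or four, at the cost of a small, bounded rounding error. That trade is what lets a large model shrink toward phone-sized memory budgets.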

Qualcomm showed a slide with data indicating that an optimized 8B-parameter Llama 3 model can match the quality of the 175B-parameter GPT-3.5 Turbo model.
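A quick back-of-the-envelope calculation shows why that size gap matters for on-device use. The parameter counts below come from the slide as described above; the bit widths (16-bit and 4-bit weights) are our own assumptions for illustration, and the figures cover weight storage only, ignoring activations and runtime overhead.

```python
def weight_footprint_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Parameter counts as cited in the article; bit widths are assumed.
large_fp16 = weight_footprint_gb(175e9, 16)  # 175B model, 16-bit weights
small_fp16 = weight_footprint_gb(8e9, 16)    # 8B model, 16-bit weights
small_int4 = weight_footprint_gb(8e9, 4)     # 8B model, 4-bit weights

print(f"175B @ 16-bit: {large_fp16:.0f} GB")
print(f"  8B @ 16-bit: {small_fp16:.0f} GB")
print(f"  8B @  4-bit: {small_int4:.0f} GB")
```

Under these assumptions the large model needs hundreds of gigabytes for its weights alone, while the quantized small model needs only a few, which is in the range of a modern phone's memory.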

All of this AI is available to developers on the Qualcomm AI Hub, which we covered here, and will run really fast on Snapdragon 8 Gen 3 powered phones, spokespeople claimed. This tiny chip, they added, can do so on less electricity than an LED lightbulb, and generate AI imagery 30 times more efficiently than data center infrastructure in the cloud.

Qualcomm did confirm a fall announcement for the next step in Snapdragon SoCs and reiterated that these would be based on the same Oryon cores that form the basis of its laptop offering, Snapdragon X Elite. Stay tuned!

The Data Center: Qualcomm is just getting started

Qualcomm has a raft of recent wins, including nearly every server company and public clouds such as AWS. The Cloud AI 100 Ultra is also the chosen inference platform from Cerebras, the company that gave us the Wafer Scale Engine. NeuReality also chose the Cloud AI 100 Ultra as the Deep Learning Accelerator in its CPU-less inference appliance.

It’s all for one simple reason: the Cloud AI 100 runs all the AI apps you might need at a fraction of the power consumption. And thanks in part to its larger on-card DRAM, the PCIe card can run models of up to 100 billion parameters. Net-net: the Qualcomm Cloud AI 100 Ultra delivers two to five times the performance per dollar of a variety of competitors across generative AI, LLM, NLP, and computer vision workloads.

And for the first time we are aware of, a Qualcomm engineer confirmed that the company is working on a new version of the Cloud AI 100, most likely using the same Oryon cores as the X Elite, which Qualcomm acquired when it bought Nuvia. We think this third generation of Qualcomm’s data center inference engine will be focused on generative AI. The Ultra has established a solid foundation for Qualcomm, and the next-generation platform could add a material new business for the company.

Automotive

Qualcomm recently stated that its auto business “pipeline” had swelled to US$30 billion, helped by its Snapdragon Digital Chassis. That is up more than US$10 billion since its third-quarter results were announced last July, and it is over twice the size of Nvidia’s auto pipeline, which Nvidia disclosed for the first time to be some $14B in 2023.

Conclusions

We said recently that Qualcomm is becoming the juggernaut of AI at the edge, and last week’s session with Qualcomm executives hammered home our position. The company has been working on AI research for over a decade, having had the presence of mind early on to see that it could use AI to its advantage against Apple. Now, it’s productizing that research in silicon and software and making it all available on the new AI Hub for developers. It is also bringing in revenue from new markets, diversifying beyond its roots in modems and the Snapdragon mobile business into Automotive and Data Center inference processing. Finally, it has doubled down on its Nuvia bet despite the ongoing, two-year-old licensing litigation with Arm.